llms.txt is an emerging standard for giving AI systems context about a website. Just as robots.txt tells search engine bots what they may crawl, llms.txt provides information aimed specifically at AI systems such as ChatGPT, Gemini, and Perplexity.

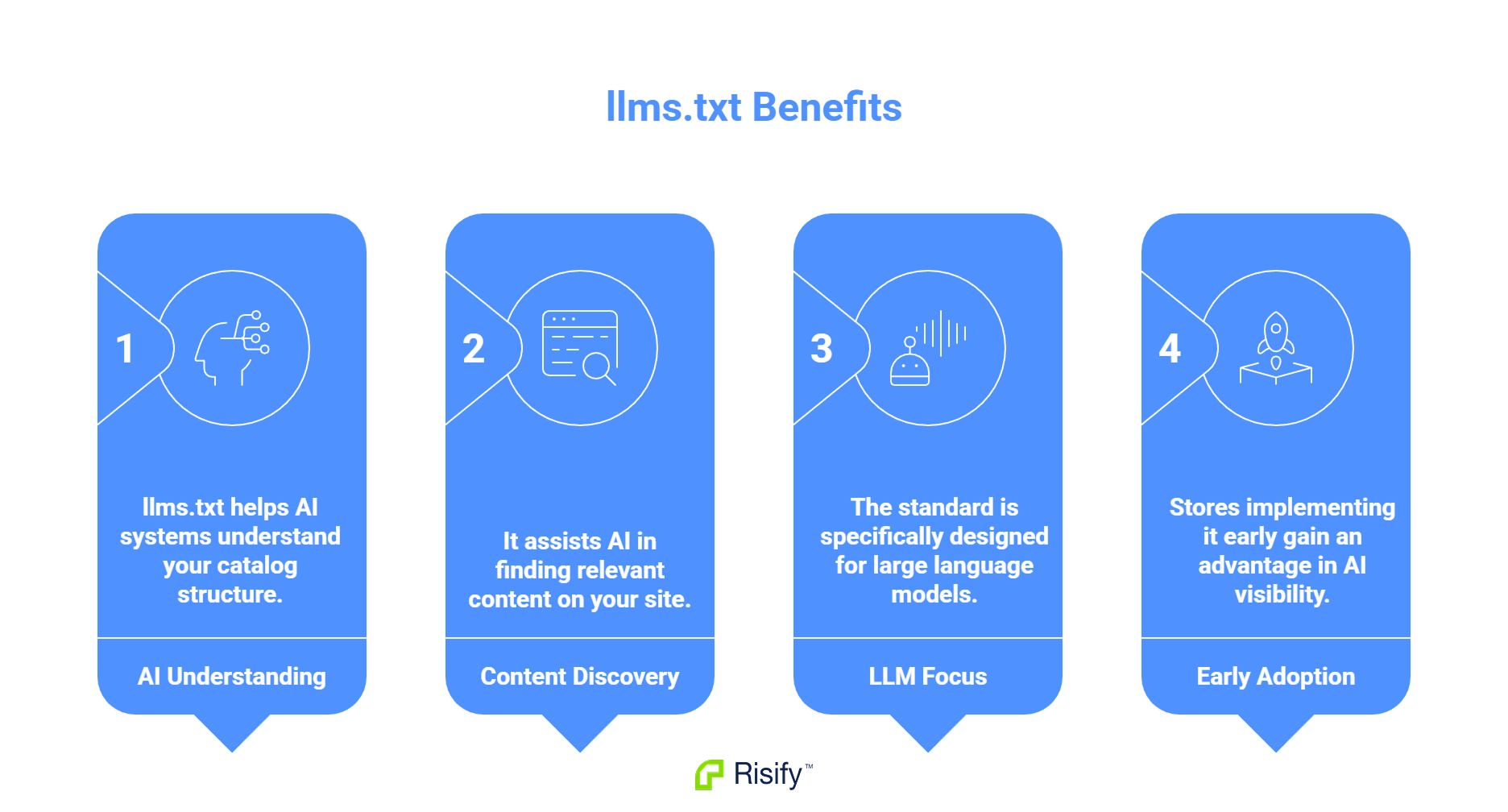

- llms.txt is a text file that provides AI-specific information about your site.

- It helps AI systems understand your catalog structure and find relevant content.

- The standard is designed for large language models, not traditional search engines.

- Stores that implement it early give AI tools more context for finding and recommending their products.

What llms.txt Is

llms.txt is a plain text file placed at the root of your website, so it is served at /llms.txt. It contains information formatted specifically for AI systems: descriptions of what your site contains, how your catalog is organized, and where to find key content.

The File Format

The file uses a simple, readable format that AI systems can parse easily. It typically includes:

- Site description: A summary of what your business sells and who it serves

- Catalog structure: How your products and collections are organized

- Key pages: Pointers to important content areas

- Content guidance: Context that helps AI systems interpret your site correctly
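To make the list above concrete, here is a minimal sketch in Python that renders a small llms.txt. It assumes the Markdown layout described in the public llms.txt proposal (a title, a short blockquote summary, then sections of links). The store name, the URLs, and the `render_llms_txt` helper are hypothetical, and the file Risify produces may be laid out differently.

```python
def render_llms_txt(name, summary, sections):
    """Render a minimal llms.txt document as a Markdown string.

    `sections` maps a section title to a list of (label, url, note) tuples.
    """
    lines = [f"# {name}", "", f"> {summary}", ""]
    for title, links in sections.items():
        lines.append(f"## {title}")
        lines.append("")
        for label, url, note in links:
            lines.append(f"- [{label}]({url}): {note}")
        lines.append("")
    # Collapse trailing blank lines into a single final newline.
    return "\n".join(lines).rstrip() + "\n"


# Hypothetical store data, for illustration only.
example = render_llms_txt(
    name="Example Outdoor Store",
    summary="Camping and hiking gear for weekend backpackers.",
    sections={
        "Collections": [
            ("Tents", "https://example.com/collections/tents",
             "Two- to four-person tents"),
        ],
        "Key pages": [
            ("Shipping FAQ", "https://example.com/pages/shipping-faq",
             "Delivery times and returns"),
        ],
    },
)
print(example)
```

The site description maps to the title and summary, while catalog structure and key pages become link sections.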

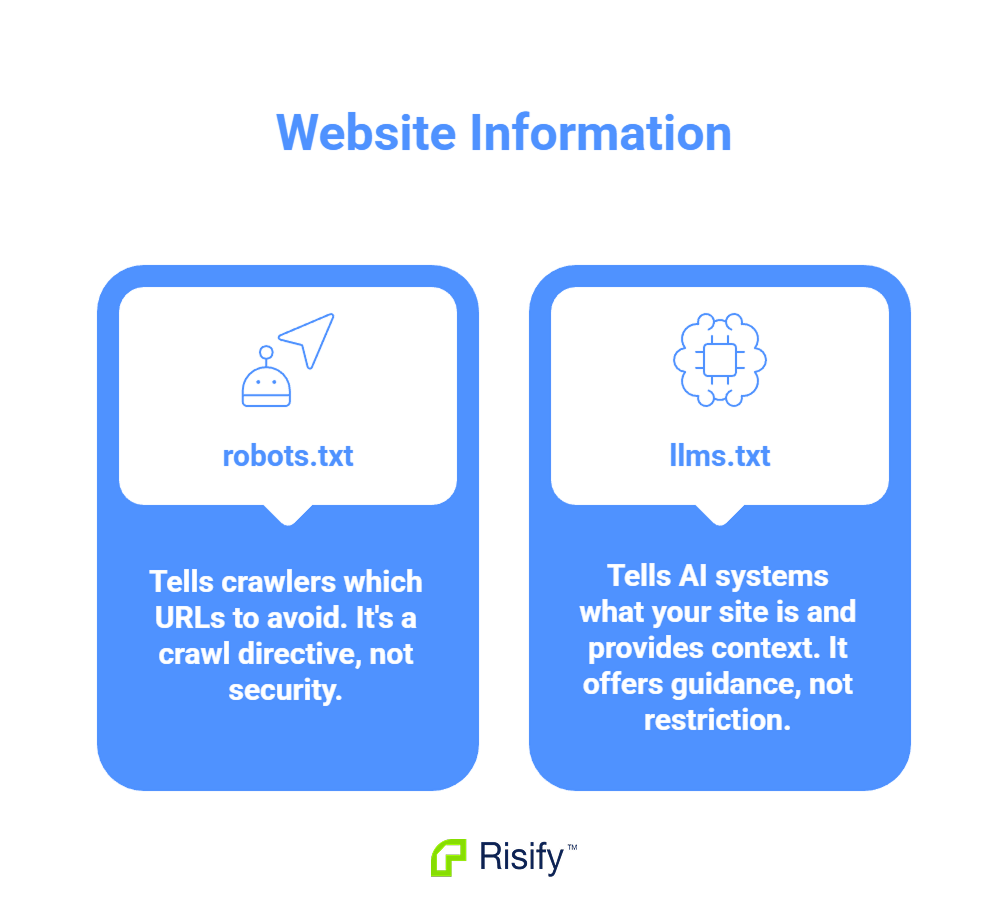

How It Differs from robots.txt

robots.txt tells crawlers which URLs they should not crawl. It is a crawl directive, not a security mechanism, and it does not describe your site. It only asks crawlers to stay away from certain paths.

llms.txt does the opposite. It tells AI systems what your site is and provides context to help them understand it. Rather than restricting access, it offers guidance.

Think of robots.txt as a "keep out" sign and llms.txt as a welcome guide that explains what visitors will find.
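The "keep out" side of that comparison can be sketched with Python's standard `urllib.robotparser` module, which is how a well-behaved crawler might evaluate robots.txt rules. The rules, the `ExampleBot` user agent, and the URLs below are hypothetical. Note that the result is only a yes or no per URL; nothing in it describes what the site contains.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart
Disallow: /checkout
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# A crawler asks one question per URL: may I fetch this?
can_crawl_tents = parser.can_fetch(
    "ExampleBot", "https://example.com/collections/tents")
can_crawl_cart = parser.can_fetch(
    "ExampleBot", "https://example.com/cart")

print(can_crawl_tents, can_crawl_cart)
```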

Why AI Tools Need Different Instructions Than Search Engines

Search engines and AI tools process websites differently. The instructions that help Google crawl your site efficiently do not address what AI systems need.

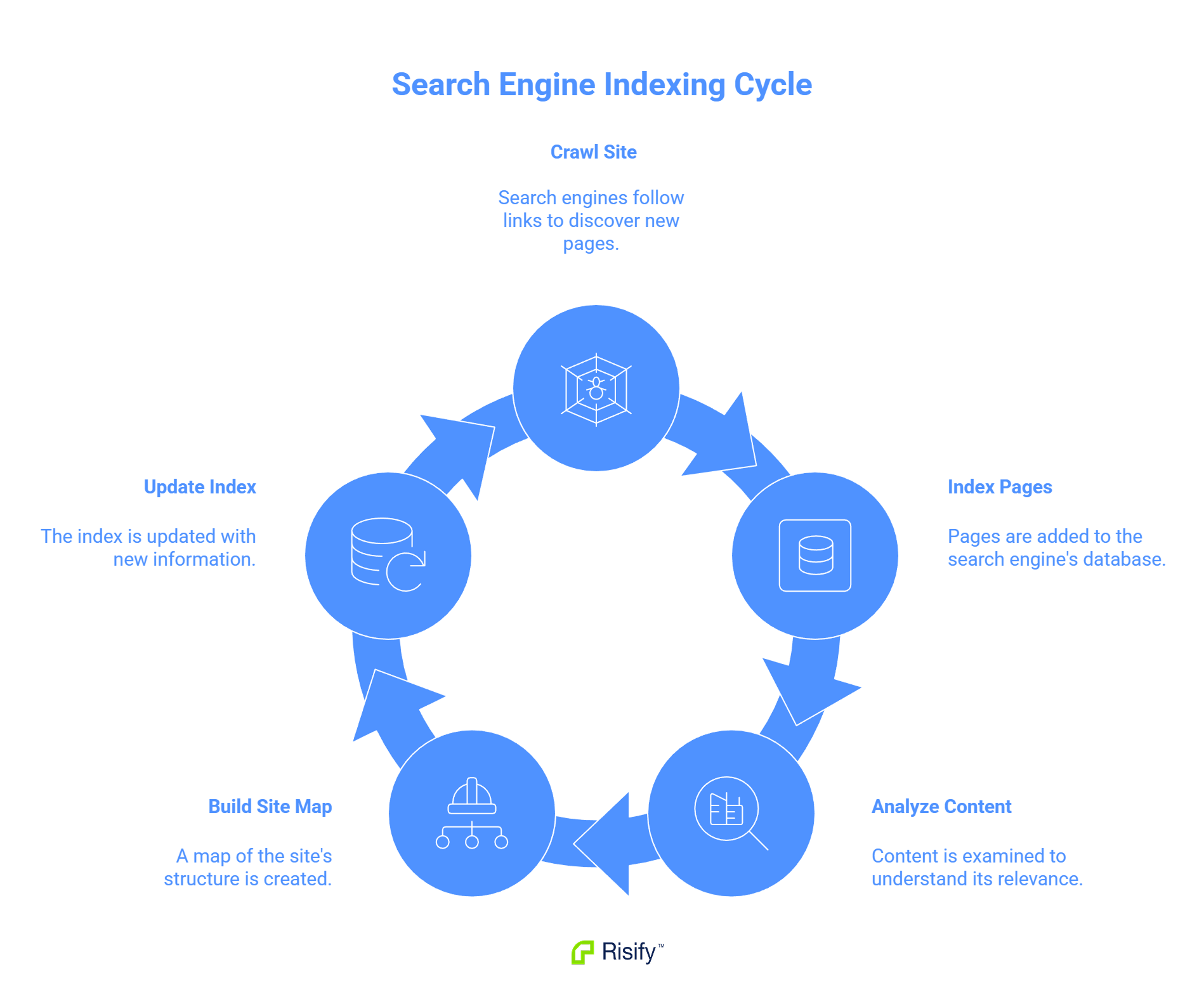

How Search Engines Work

Search engines like Google crawl your site by following links. They index pages, analyze content, and build a map of your site over time. This process happens continuously: Googlebot visits, records what it finds, and updates its index.

Traditional SEO files support this:

- robots.txt: Tells crawlers which pages to skip

- sitemap.xml: Lists the pages you want indexed and, optionally, when they were last updated

- Meta tags: Provide page-level information

These tools help search engines discover and index your content efficiently.

How AI Tools Work

AI shopping assistants and search tools work differently. They process content to answer specific user questions, often in real time. When a user asks ChatGPT for product recommendations, the AI needs to quickly understand what your store sells and whether your products match the query.

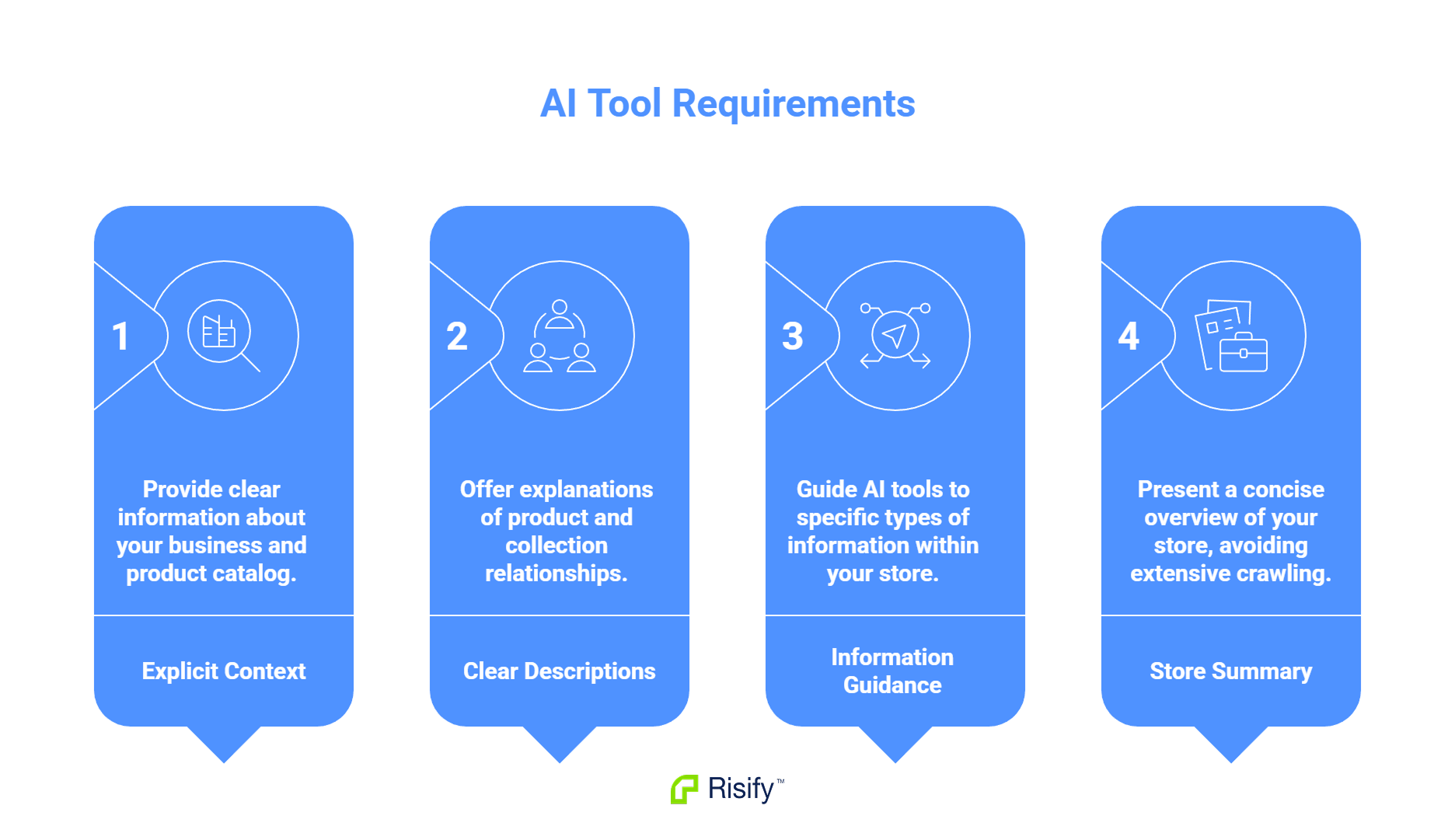

AI tools benefit from:

- Explicit context about your business and catalog

- Clear descriptions of how products and collections relate

- Guidance on where to find specific types of information

- A summary that helps them understand your store without crawling every page

llms.txt provides this context in a format designed for AI consumption.

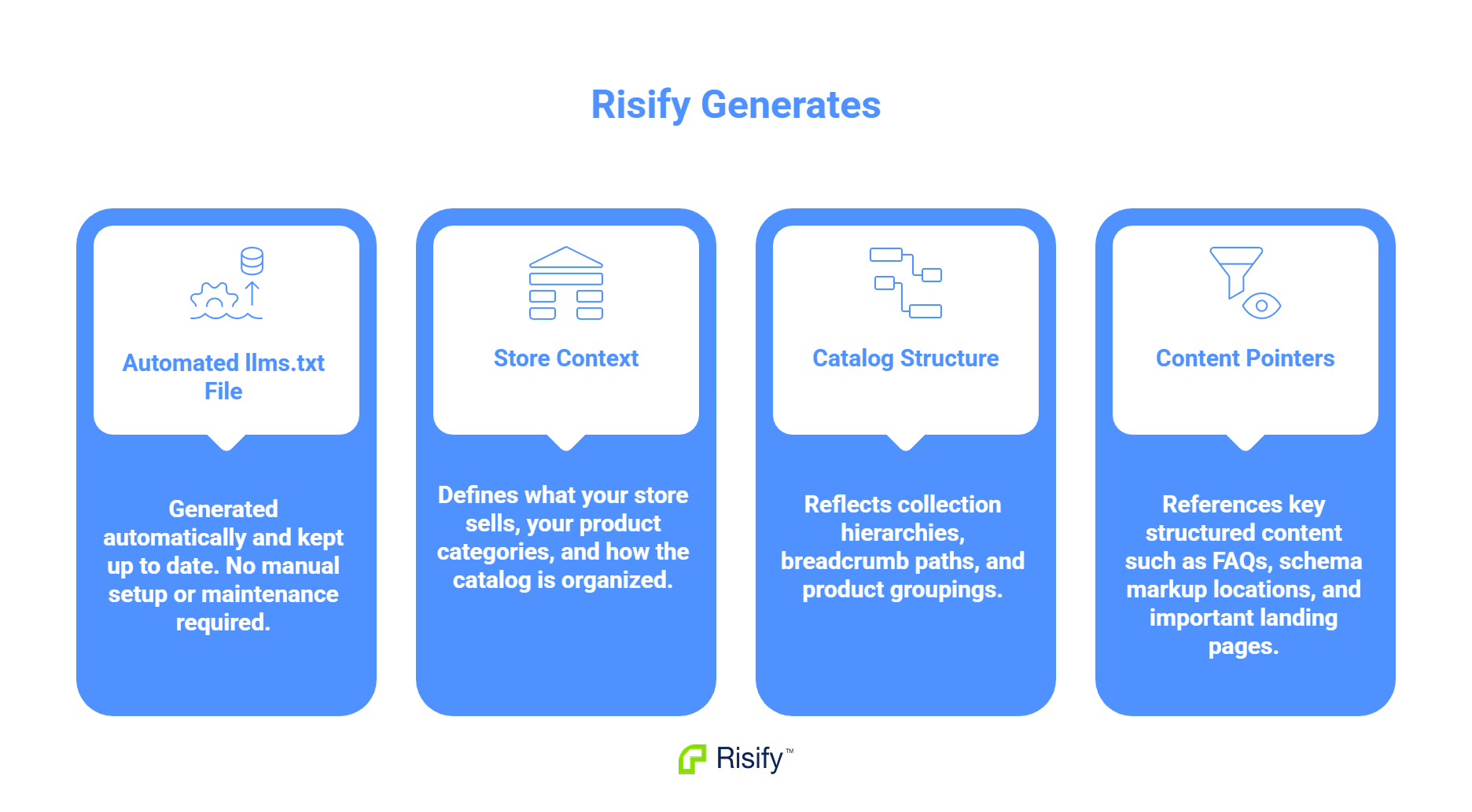

What Risify Generates

Risify generates llms.txt files based on your store structure. You do not need to write or maintain the file manually; it reflects the catalog organization and content relationships you build through Risify's features.

Store Context

The generated file includes information about your business:

- What your store sells

- The categories and product types you offer

- How your catalog is structured

This gives AI systems immediate context about your store without requiring them to piece it together from scattered page content.

Catalog Structure

As you define collection relationships, breadcrumb paths, and navigation structures in Risify, this information is reflected in the llms.txt file. AI systems can understand:

- Which collections are parent categories and which are subcategories

- How products group together

- The hierarchy of your catalog

This structured information helps AI tools recommend relevant products when users ask category-level questions.
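As a rough illustration of how a parent and subcategory hierarchy could be written out as text, the sketch below turns a mapping of collections into an indented outline. The `render_hierarchy` helper and the catalog data are hypothetical, and the sketch assumes the hierarchy is a tree with no cycles. It is not a description of how Risify stores or emits this information.

```python
def render_hierarchy(children, roots, depth=0):
    """Return indented list lines for a parent -> subcollections mapping."""
    lines = []
    for name in roots:
        lines.append("  " * depth + f"- {name}")
        # Recurse into subcollections, indenting one level deeper.
        lines.extend(
            render_hierarchy(children, children.get(name, []), depth + 1))
    return lines


# Hypothetical catalog: each key is a parent collection.
children = {
    "Camping": ["Tents", "Sleeping Bags"],
    "Tents": ["Ultralight Tents"],
}

outline = "\n".join(render_hierarchy(children, ["Camping"]))
print(outline)
```

An outline like this states the hierarchy explicitly, so a reader (human or AI) does not have to infer it from navigation menus.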

Content Pointers

The file includes references to structured content on your site:

- FAQ content organized by product or collection

- Schema markup locations

- Key landing pages

AI systems can then go straight to the right place for specific types of information rather than scanning your entire site.
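From the consuming side, a tool might read these pointers by grouping links under their section headings. The sketch below assumes the file uses Markdown `## ` headings and `- [label](url)` link lines, as in the public llms.txt proposal. The sample content and the `parse_sections` helper are hypothetical, and real AI tools may process the file quite differently.

```python
import re

# Matches "- [label](url)" with an optional ": note" suffix.
LINK_RE = re.compile(
    r"^- \[(?P<label>[^\]]+)\]\((?P<url>[^)]+)\)(?::\s*(?P<note>.*))?$")


def parse_sections(text):
    """Group link entries by the '## ' heading they appear under."""
    sections = {}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("## "):
            current = line[3:].strip()
            sections[current] = []
        elif current is not None:
            match = LINK_RE.match(line)
            if match:
                sections[current].append((match["label"], match["url"]))
    return sections


# Hypothetical llms.txt content, for illustration only.
SAMPLE = """\
# Example Outdoor Store

> Camping and hiking gear.

## FAQ

- [Tent FAQ](https://example.com/pages/tent-faq): Setup and care questions

## Key pages

- [Best sellers](https://example.com/collections/best-sellers)
"""

pointers = parse_sections(SAMPLE)
print(pointers)
```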

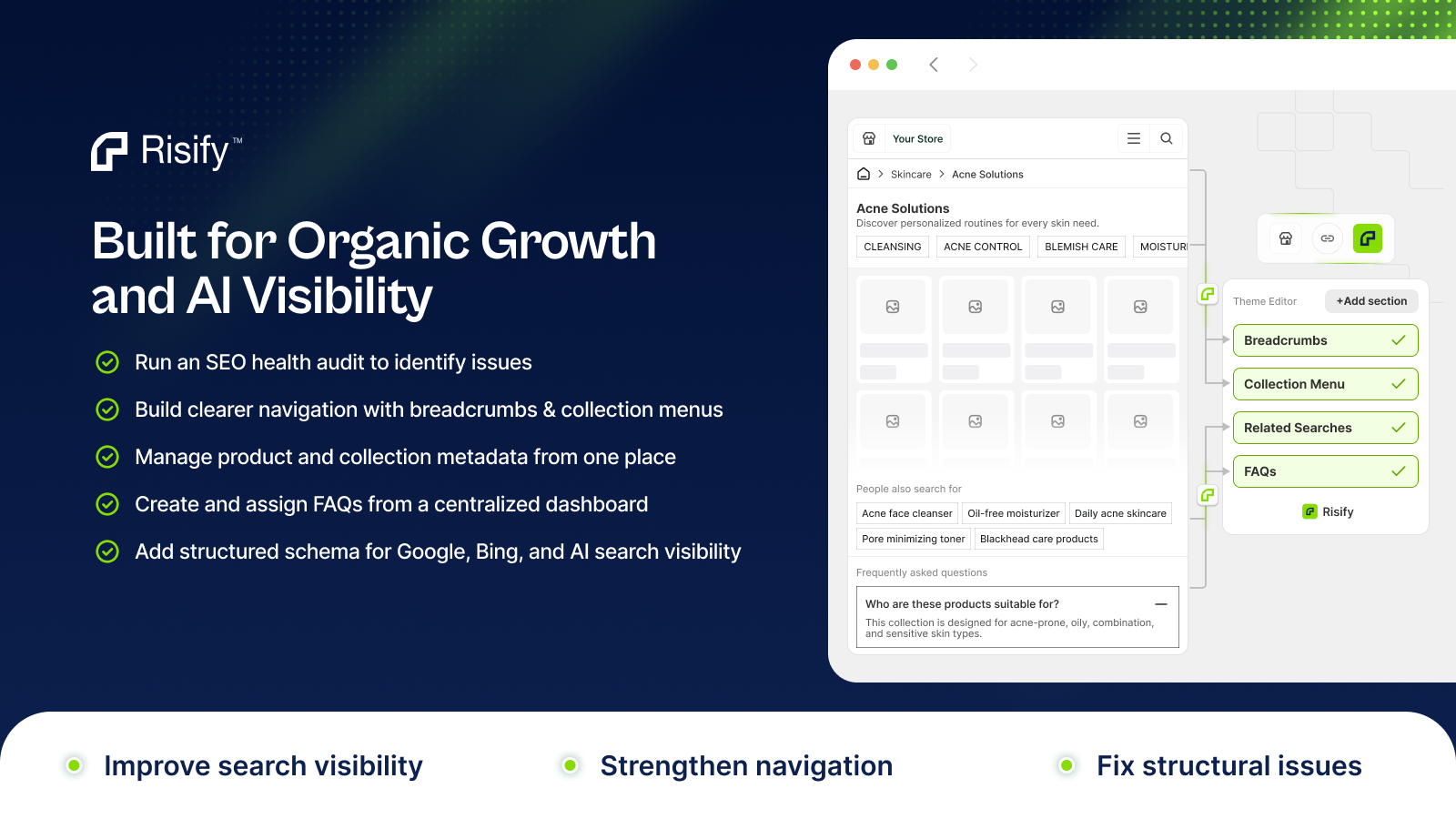

Prepare Your Store for AI Discovery with Risify

llms.txt is an emerging standard that most merchants have not encountered yet. Stores that implement it early give AI tools the context they need to understand and recommend their products accurately.

Risify generates llms.txt files automatically as part of its AI readiness features. As you build your catalog structure and content relationships, the file updates to reflect your store.